
Advanced Computing and EDA (ACE) Lab at SJTU


Approximate Computing

Three important goals of VLSI design are reducing circuit area, improving circuit frequency, and reducing power consumption. All of them are pursued under the basic assumption that the circuit correctly implements the specified function. However, many applications in wide use today, such as signal processing, pattern recognition, and machine learning, do not require perfect computation; results with small errors are still acceptable. A new design paradigm, known as approximate computing, has recently been proposed to design circuits for such error-tolerant applications. By exploiting this error tolerance, it deliberately sacrifices a small amount of accuracy to achieve improvements in area, performance, and power consumption.

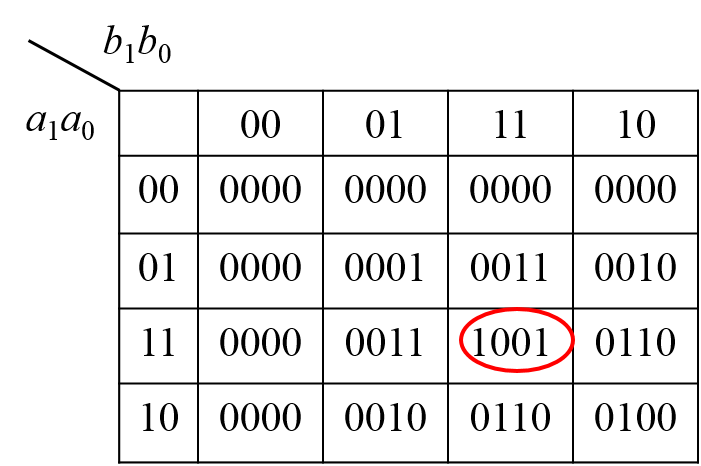

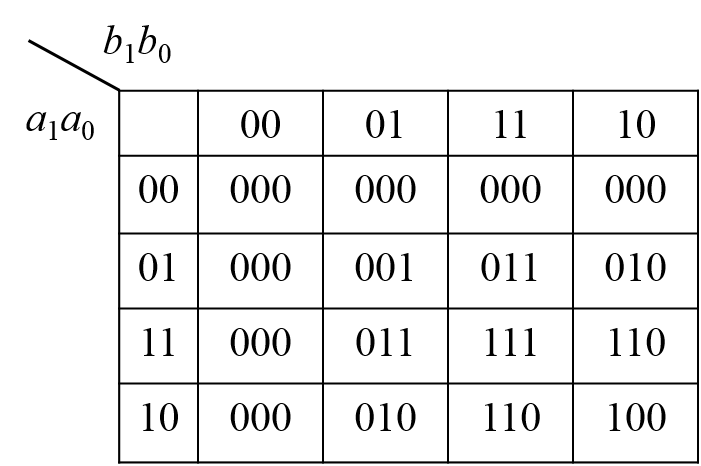

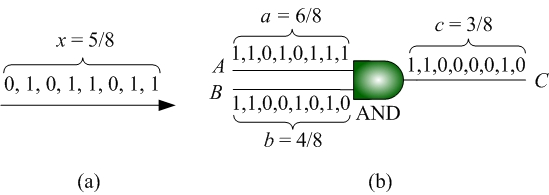

An

example of approximate computing is shown in Fig. 1.

Fig. 1(a) shows the Karnaugh map of an accurate 2-bit

multiplier. If we change the output "1001" in the red

circle in Fig. 1(a) to "111", we obtain the Karnaugh

map of an approximate 2-bit multiplier, as shown in

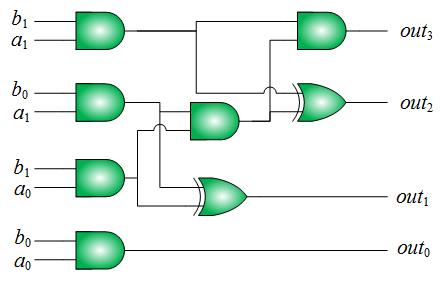

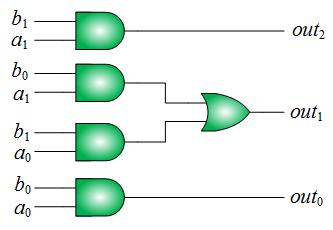

Fig. 1(b). The circuit for the accurate multiplier and

that for the approximate multiplier are shown in Fig.

1(c) and 1(d), respectively. Notice that by

introducing a small amount of inaccuracy, we reduce

both the area and the delay of the original multiplier

significantly.
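The error introduced by the change in Fig. 1(b) can be quantified by enumerating all 16 input pairs. The sketch below is a minimal illustration, not the circuit of Fig. 1(d): the gate-level form in `approx_mul2` (an OR gate in place of the exact XOR/carry logic for the middle bit) is an assumption chosen because it reproduces the modified Karnaugh map, and the function names are hypothetical.

```python
from itertools import product


def exact_mul2(a: int, b: int) -> int:
    """Accurate 2-bit x 2-bit multiplier (needs a 4-bit result)."""
    return a * b


def approx_mul2(a: int, b: int) -> int:
    """Approximate 2-bit multiplier with a 3-bit output.

    Hypothetical gate-level form consistent with the modified Karnaugh map:
    it matches the exact product everywhere except 3 x 3, which yields 7.
    """
    a0, a1 = a & 1, (a >> 1) & 1
    b0, b1 = b & 1, (b >> 1) & 1
    o0 = a0 & b0
    o1 = (a1 & b0) | (a0 & b1)  # OR instead of XOR; both terms are 1 only for 3 x 3
    o2 = a1 & b1                # no carry logic is needed any more
    return (o2 << 2) | (o1 << 1) | o0


def error_stats() -> tuple[float, float, int]:
    """Return (error rate, mean error distance, max error) over all 16 inputs."""
    errors = [abs(exact_mul2(a, b) - approx_mul2(a, b))
              for a, b in product(range(4), repeat=2)]
    error_rate = sum(e != 0 for e in errors) / len(errors)
    mean_error = sum(errors) / len(errors)
    return error_rate, mean_error, max(errors)


if __name__ == "__main__":
    rate, med, worst = error_stats()
    print(f"error rate = {rate}, mean error distance = {med}, max error = {worst}")
```

Running it reports an error rate of 1/16, a mean error distance of 2/16, and a maximum error of 2, which is the kind of small, bounded inaccuracy that error-tolerant applications can absorb.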

Selected Publications on Approximate Logic Synthesis

Selected Publications on the Design, Synthesis, and Analysis of Approximate Arithmetic Circuits

Selected Publications on the Design of Approximate Computing Architectures

Stochastic Computing

Traditional arithmetic circuits operate on numbers encoded in binary radix, a deterministic way to represent numerical values with zeros and ones. Stochastic encoding is a fundamentally different way to represent numerical values with logical zeros and ones. In such an encoding, a real value $p$ in the unit interval is represented by a sequence of $N$ random bits $X_1, X_2, \ldots, X_N$, with each $X_i$ having probability $p$ of being one and probability $1-p$ of being zero, i.e., $P(X_i = 1) = p$ and $P(X_i = 0) = 1 - p$. Fig. 2(a) shows an example of a stochastic bit stream encoding the value 5/8.
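To make the encoding concrete, the following minimal sketch generates a stochastic bit stream and recovers the value it encodes as the fraction of ones. The helper names `encode` and `decode` are hypothetical, and using Python's pseudo-random generator as the bit source is an assumption for illustration only; hardware stream generators work differently.

```python
import random


def encode(p: float, n: int, rng: random.Random) -> list[int]:
    """Generate an n-bit stochastic stream in which each bit is 1 with probability p."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in the unit interval [0, 1]")
    return [1 if rng.random() < p else 0 for _ in range(n)]


def decode(stream: list[int]) -> float:
    """Estimate the encoded value as the fraction of ones in the stream."""
    return sum(stream) / len(stream)


if __name__ == "__main__":
    # A hypothetical 8-bit stream with five ones represents 5/8.
    print(decode([0, 1, 1, 0, 1, 0, 1, 1]))  # 0.625

    # A random stream only approximates p; the estimate tightens as n grows.
    rng = random.Random(2024)  # fixed seed for reproducibility
    for n in (8, 64, 1024, 16384):
        print(n, decode(encode(5 / 8, n, rng)))
```

The same value has many valid streams (any ordering of five ones among eight bits encodes 5/8), and accuracy is traded against stream length rather than against the number of wires.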

Since the random sequences are composed of binary digits, we can apply digital circuits to process them. Thus, instead of mapping Boolean values to Boolean values, a digital circuit now maps real probability values to real probability values. We refer to this type of computation as stochastic computing. Fig. 2(b) illustrates an AND gate performing multiplication on stochastic bit streams: if the two input streams are independent and their bits are one with probabilities $p_1$ and $p_2$, each output bit is one with probability $p_1 p_2$, so the output stream encodes the product.
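The AND-gate multiplier can be simulated bit by bit. This is a minimal sketch assuming independently generated input streams; the function names are hypothetical and the pseudo-random bit source stands in for real stream-generation hardware.

```python
import random


def bernoulli_stream(p: float, n: int, rng: random.Random) -> list[int]:
    """n-bit stochastic stream; each bit is 1 with probability p."""
    return [1 if rng.random() < p else 0 for _ in range(n)]


def and_gate(x: list[int], y: list[int]) -> list[int]:
    """Bitwise AND of two equal-length streams, one bit per clock cycle."""
    return [a & b for a, b in zip(x, y)]


def value(stream: list[int]) -> float:
    """Value encoded by a stream: the fraction of ones."""
    return sum(stream) / len(stream)


if __name__ == "__main__":
    rng = random.Random(7)
    n = 4096
    x = bernoulli_stream(0.5, n, rng)
    y = bernoulli_stream(0.75, n, rng)  # generated independently of x
    print(value(and_gate(x, y)))        # close to 0.5 * 0.75 = 0.375

    # With fully correlated inputs (y = x), AND simply returns x,
    # so the result is about 0.5 rather than 0.25.
    print(value(and_gate(x, x)))
```

The second print shows why independence matters: a single gate replaces a full binary multiplier only when the input streams are uncorrelated.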

We study the following fundamental problems related to stochastic computing:

Selected Publications on the Synthesis of Stochastic Computing Circuits

Selected Publications on the Generation of Stochastic Bit Streams

Selected Publications on Architectures and Applications of Stochastic Computing

In-Memory Computing

Traditional von Neumann architectures require data movement between the processor and the memory, which creates a bottleneck in performance and energy efficiency known as the "memory wall". To address this problem, the in-memory computing (IMC) paradigm has been proposed. It exploits opportunities to compute within the memory so that the amount of data movement can be significantly reduced. We study the following topics related to IMC:

Selected Publications on Hardware/Software Co-Design for Reliable Analog IMC

Selected Publications on Synthesis and Scheduling for Digital IMC

Electronic Design Automation for VLSI

We are also interested in electronic design automation (EDA) for VLSI. Topics include:

Selected Publications